“It is difficult to regulate something when its capabilities are evolving and hard to predict.”

Luca Sambucci, the Head of Artificial Intelligence at SNGLR XLabs AG

In the mid-20th century, the first AI tools, such as Eliza, appeared. Many feared tech would enslave humanity and take over our planet. But things have turned out far from that reality. So, no worries!

But the real danger came from where nobody expected it. Since ChatGPT, Midjourney, Sora, and Gemini emerged, governments have seen the need for stricter AI laws and new regulations due to AI’s vast impact on society. This technology affects almost every sphere of our lives: culture, medicine, jobs, businesses, and more.

In this context, evolving cybersecurity threats are a real danger. The Forrester report “Top Cybersecurity Threats in 2023” focuses on the weaponization of generative AI and ChatGPT by cyber attackers. With AI technology advancing rapidly, cybercriminals have both a playground and the means to commit malicious acts against individuals and organizations.

Thus, the critical question arises: Is artificial intelligence good for society?

This article aims to find the answer to this question and explores the importance of government regulation of AI. Read on!

AI in 2024: The AI Landscape Overview

History

The first time people heard about artificial intelligence was in 1955, when John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon coined the term in their proposal. However, 1956 is considered the official birthday of AI. Needless to say, artificial intelligence hasn’t turned out the way pop culture imagined it, for instance, in the movie “2001: A Space Odyssey” or in the book “Erewhon” by Samuel Butler from way back in 1872.

In 1957, Cornell University psychologist Frank Rosenblatt presented the world with the first trainable neural network, the Perceptron. It resembled modern neural networks, but it had only one layer with adjustable weights and thresholds.
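To make the idea of a single layer with adjustable weights and a threshold more concrete, here is a minimal sketch in Python. It illustrates the classic perceptron learning rule and is not Rosenblatt’s original implementation; the function names, the learning rate, and the AND-gate training data are assumptions chosen for this example.

```python
def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Train a single-layer perceptron on (inputs, target) pairs.

    Hypothetical helper for illustration: targets are 0 or 1.
    """
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0  # plays the role of the (negative) threshold
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction
            # Nudge weights and bias only when the prediction is wrong.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum exceeds the threshold, else 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


# Assumed toy data: the logical AND of two binary inputs.
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
```

Because AND is linearly separable, a single layer is enough to learn it. Problems that are not linearly separable, such as XOR, are beyond a one-layer model, which is one reason modern networks stack many layers.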

More recently, in 2012, researchers at the University of Toronto developed an efficient convolutional neural network. In the ImageNet Large Scale Visual Recognition Challenge, it achieved an error rate of about 16%, far better than the roughly 25% error rate of the best entry the year before. Artificial intelligence, still in its infancy, is showing promising signs of rapid development and a bright future.

Let’s pause here for a moment and set the record straight. Our popular idea of AI is wrong. Although the British polymath Alan Turing argued that machines could, in principle, exhibit intelligence given sufficient computing power and the right algorithms, true artificial intelligence doesn’t exist today.

What we call artificial intelligence today is mostly neural networks. Strictly speaking, AI refers to intelligent machines that are just as smart as humans or even smarter. Neural networks are loosely inspired by biological neurons but don’t possess the creativity and unpredictability of the human brain. You can find more striking AI facts in our article.

The AI Market in 2024

The buzzword of the year is artificial intelligence. As we found out, AI is by no means a new concept, but it gains more attention every year as it develops. In 2024 in particular, companies using AI stand to gain technological dominance and even geopolitical power. Why is this important? Because AI companies can achieve more sales, better processes, and a competitive advantage, putting them in a dominant position in the global market.

Let’s look at the figures. In 2024, the global AI market is valued at about $196.63 billion, compared to $95.6 billion in 2021. It’s estimated to hit $1.85 trillion by 2030. Such growth is driven by advances in automation and machine learning. Find out more AI predictions and trends for 2024 in our blog.

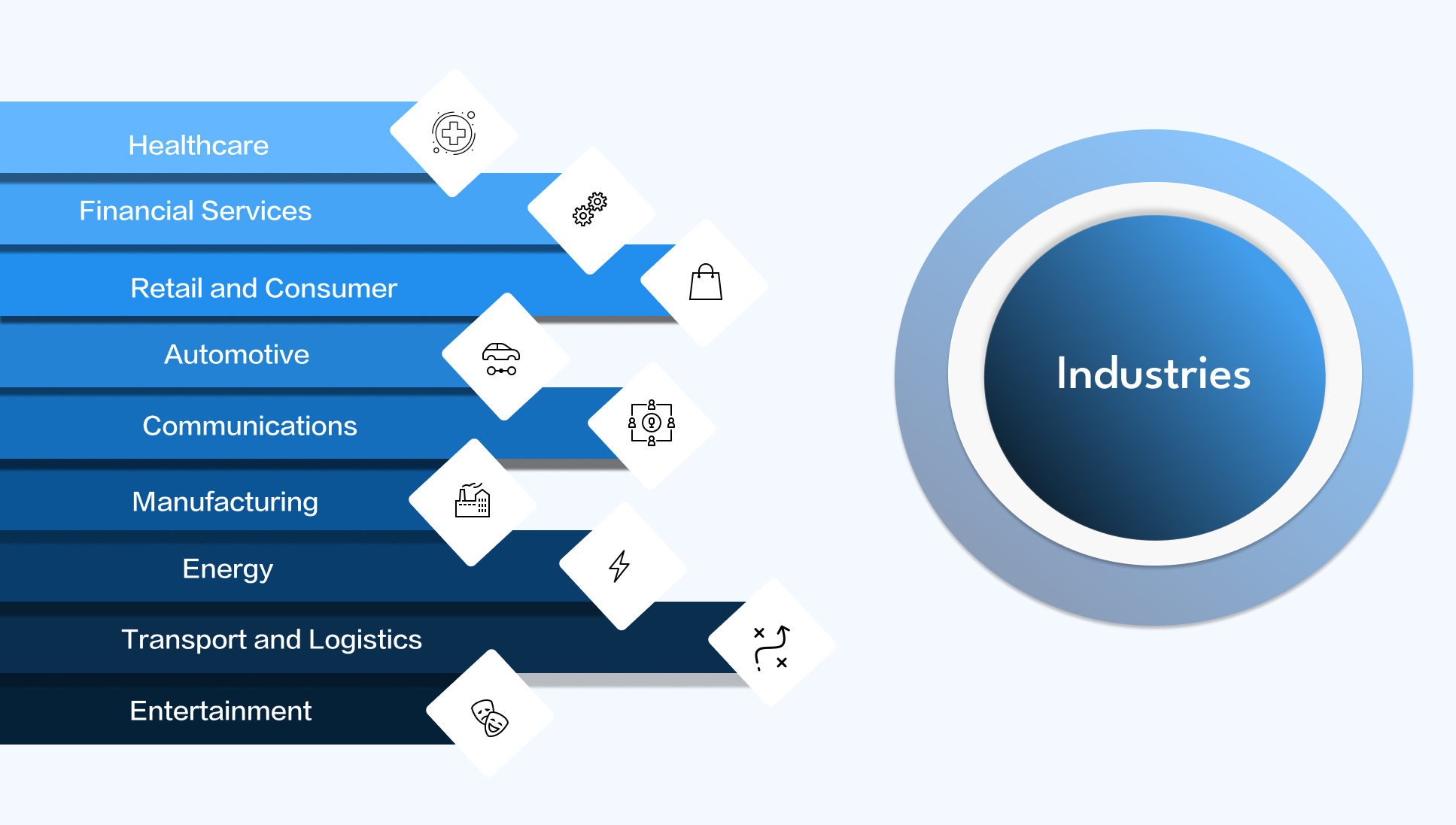
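To put these market figures in perspective, the short Python sketch below works out the compound annual growth rate (CAGR) they imply. It is a back-of-the-envelope calculation: the function and variable names are our own, and it assumes the three figures quoted above are directly comparable, which may not hold if they come from different market reports.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of US dollars, as quoted in this article.
market_2021 = 95.6
market_2024 = 196.63
market_2030 = 1850.0

past_growth = cagr(market_2021, market_2024, 2024 - 2021)
implied_growth = cagr(market_2024, market_2030, 2030 - 2024)

print(f"2021-2024 growth per year: {past_growth:.1%}")
print(f"2024-2030 implied growth per year: {implied_growth:.1%}")
```

Under these assumptions, the market grew by roughly 27% per year between 2021 and 2024, and the 2030 forecast implies growth of roughly 45% per year from here on.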

Industries

From finance to manufacturing, AI has penetrated diverse sectors with tailored applications. Mostly, AI is integrated into these industries:

- Healthcare

- Financial Services

- Retail and Consumer

- Automotive

- Communications

- Manufacturing

- Energy

- Transport and Logistics

- Entertainment

Artificial intelligence helps companies revolutionize their customer experience, automate processes, and improve decision-making. Thus, this technology brings innovation, efficiency, and big potential. AI’s versatility allows it to power both behind-the-scenes algorithms and end-user integrations.

44% of companies implement AI for automation to cut operational costs. It speeds up operations and frees employees to deal with more important tasks that require human involvement. In the finance industry, 80% of firms may significantly increase their revenues thanks to artificial intelligence. In the retail industry, 88% of businesses plan to harness AI to automate their processes, and 38% of medical providers recognize the benefits of using computers to make diagnoses.

These statistics suggest that artificial intelligence can simplify business operations and make our lives easier. Although adopting this technology may not be mandatory for your company, it is increasingly necessary to survive in the market. And though AI deployment brings many benefits to businesses, it also poses a potential threat. We’ll discuss this in detail in the next sections.

What are the Ethical Issues of Artificial Intelligence?

As we’ve already figured out, AI’s impact on our world is immense, but not always in desirable ways. AI risks like bias, discrimination, bioweapons, and cyber threats are becoming increasingly worrying with the development of artificial intelligence.

Even OpenAI’s CEO, Sam Altman, agrees that AI-generated content poses significant dangers. He calls for AI regulation as the technology reshapes society. Altman states, “I’m particularly worried that these models could be used for large-scale disinformation. Now that they’re getting better at writing computer code, [they] could be used for offensive cyber-attacks.”

It is crucial to balance protecting against misinformation generated by AI and promoting innovation. In democratic countries, the AI risks can be much greater. At the same time, concerns about AI, including privacy and cyber danger, can limit the scope of research and activities of Western companies.

Authoritarian regimes don’t have such concerns about the challenges and risks of artificial intelligence regulations. They may use AI in ways democratic countries would never be able to, like getting and utilizing sensitive personal or biometric data. That makes it challenging to create effective AI regulation initiatives for the entire world.

The dichotomy between innovation and regulation is quite blurred and, therefore, often manipulated by businesses to avoid strict accountability. Yet, Amnesty International highlights the threats AI creates for mass surveillance, policing, and distribution of social benefits. The organization believes that artificial intelligence can increase discrimination and human rights violations, putting marginalized groups, such as migrants, refugees, and asylum seekers, at risk.

The main task for international organizations is to promote innovation while ensuring that AI does not jeopardize public safety and welfare and adheres to strict transparency and accountability measures.

Main AI Ethical Concerns

By prioritizing fairness, privacy, and accountability, we can shape AI’s responsible development and usage. Only in this way can we harness the full potential of this technology. But let’s find out what ethical AI concerns governments are trying to regulate.

AI and Bias

When we talk about AI regulation, bias is a big concern. Imagine the following: AI learns from tons of data, right? But what happens when that data is biased? Yes, AI can end up making biased decisions. Just think of unfair hiring, loans, and even the justice system. The ethical use of AI is about getting rid of bias and giving everyone an equal chance.

Privacy and Data Protection

AI requires a lot of information, and collecting and storing it often creates serious security vulnerabilities. As AI becomes a significant factor in our everyday lives, we should consider how we can protect our data. We must find a middle ground between making AI smarter and protecting individual privacy. It’s a balancing act.

AI Safety

As AI becomes increasingly intelligent, we should take steps to keep it from becoming overpowering. The risks are real, namely weapons controlled by AI and the “silent” or hidden application of AI technology. AI is a powerful tool, but we have always collectively defined its rules and applications. We need to stay at the helm to ensure that it does not steer us against our interests. It’s like designing software: with the right safeguards built in, the end product will not turn against its makers.

Accountability

The point is this: AI can make its own decisions. But if it screws up, who is to blame? We need to clarify who is responsible. We must also know what’s happening inside AI systems to trust them. If we talk openly about how AI works, we can monitor it.

Employment Disruption

AI is making our lives easier and reshaping the job market. However, some people will become unemployed as AI takes over their jobs. There is a need for strategies that will help people learn new skills and make AI ethical and beneficial to all. We should ensure that no one is left out of the AI revolution.

Current State of AI Regulation

Even with the need for extra precautions to ensure that AI is safe, no one should fear innovation or believe a few big companies will monopolize its capabilities. A balanced approach to change is essential and should make room for new concepts and ideas.

Governments should regulate AI by setting up rules for AI systems while at the same time refraining from overburdening businesses with unjustified bureaucracy. By establishing ethical AI principles aimed at openness, transparency, and safety, we will allow everyone to play the game without the burden of rules weighing them down.

However, relying solely on free-market mechanisms to address AI ethics is not the best plan. Since AI is becoming more capable very quickly and is influencing public opinion, we need to set strict rules. Organizations such as OpenAI should be closely monitored to ensure that they are not acting irresponsibly when developing new technologies.

Policymakers must keep AI accessible to all in order to keep things competitive. This means bringing start-ups into an ecosystem that shares resources and aligns licensing with fair play and collaboration between newcomers and incumbents. Only when everyone is allowed in will different perspectives and positive competition emerge.

Building Ethical AI: Principles and Guidelines

As we continue to develop and harness artificial intelligence, we need to follow a specific list of ethical AI principles. They are the following:

- transparency

- fairness

- explainability

- data protection

- privacy

- non-discrimination

But how do you ensure your AI software follows all these principles? Here are some ideas for making your software ethical and addressing the main AI concerns.

Regulatory Frameworks

One of the AI ethical issues that deserves close attention is regulation: rules from governments and regulators that ensure AI systems remain open, accountable, and secure. This allows both big players and regular people to get involved while operating strictly by the rules. When we commit to responsible AI practices, everyone can take part, and nobody gets lost in a maze of rules.

Encouraging Competition and Collaboration

To keep things fair, we should ensure that everyone has an equal chance. That means we need to help students get interested in AI subjects, give them scholarships, and make sure universities have enough funding for AI projects. It’s all about getting more people involved. If everyone can play, we all win!

Copyright

As for who owns what in the AI environment, it gets pretty tricky. Who has the rights to what the big AI models produce? There is a lot of controversy, mainly because of copyright issues around the data used to train these models.

For example, GPT-4 and Stable Diffusion are big models that learn from large amounts of data, and some of that data seems to be copyrighted. As a result, there have been legal disputes over who should own the content generated in the course of AI operations.

Privacy

Protecting privacy is a major concern, especially since AI needs enormous amounts of data to learn. There have already been some awkward moments, such as when a ChatGPT bug allowed users to see other people’s messages. Italy even shut down the application for a short time. This is forcing policymakers to think about how to keep our online information safe.

Algorithmic bias

AI can sometimes acquire bad habits from the data it learns from. That’s why some people try to prevent AI from discriminating against specific groups of people. Companies can screen their AI for possible bias themselves, and regulators can do this kind of work too. After all, AI learns from a lot of data, and if the data is biased, the AI may also make biased decisions at key stages. For this reason, some regulators are discussing rules to prevent AI from discriminating.
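As an illustration of what such a bias screen might look like, here is a minimal Python sketch that compares approval rates across groups. It is only one simple check among many possible ones, not a complete fairness audit. The function names, the sample decisions, and the 0.8 cutoff (borrowed from the “four-fifths” rule of thumb used in US employment guidelines) are assumptions made for this example.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Hypothetical model decisions: 60 of 100 approvals in group_a, 30 of 100 in group_b.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; pick one that fits your context
```

In this made-up data set, group_b is approved half as often as group_a, so the model would be flagged for a closer look. A flag like this does not prove discrimination on its own, but it tells a company or a regulator where to start asking questions.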

Licensing Requirements

Artificial intelligence leaders say we need to be as careful with artificial intelligence as we are with drugs or driving, both of which are strictly controlled by the authorities. Imagine that! Sam Altman, CEO of OpenAI, believes that highly capable AI models should not be released without prior licensing.

Jason Matheny, President of the RAND Corporation, believes we need a system that keeps AI models on the right track. Gary Marcus, a well-known AI researcher, encourages us to follow the FDA’s lead and test AI before it’s released. It’s about making sure AI is safe before it reaches the world.

“Compute” regulation

It takes a lot of computing power to train AI. That is why some believe that we should be careful about who buys the powerful chips that provide it and what they actually do with them. It’s kind of like having a watchdog for AI work that could pose a threat.

Yonadav Shavit, a researcher at Harvard, believes that the world should keep an eye on the buyers of high-performance chips for AI. In other words, tracking the hardware becomes a way to keep track of the AI work done on it.

Proposed AI Regulatory Standards Frameworks

There are no official laws for artificial intelligence yet, but some cool ideas have already been thrown around. For example, the Biden administration has published a Blueprint for an AI Bill of Rights, and NIST has released the AI Risk Management Framework. They give us some good tips on how to play it safe with AI, but here’s the catch: they’re not laws. Thus, companies aren’t forced to follow them.

Now, let’s look at some of the AI regulatory standards frameworks that exist in 2024.

Open-Source AI

Some people say we should handle AI like open-source software that anyone can use for free (kind of like Wikipedia and Linux). But trying to regulate or even ban open-source stuff is a difficult task. How can you even control it? There is also the concern that overly strict AI regulations will stifle innovation.

Let’s talk about Europe, which is home to some great AI start-ups, such as Mistral AI and Stability AI. The EU is thinking about new rules called the AI Act. One concern is making sure that these rules don’t stifle these start-ups. Europe could become the next big AI hotspot, like Silicon Valley, but only if it plays its cards right.

The AI Act

The AI Act is a big deal because it could become the standard for AI regulation not just in Europe but potentially worldwide. The EU’s main priority is to review AI applications in airplanes, cars, and medical devices to ensure that AI is accurate and unbiased.

Companies selling into Europe would have to adhere to these strict rules, which, in turn, would also influence standards in other countries. However, it’s not just about following the rules. It’s about finding the right balance between innovation and protection.

Self-regulation

Another new idea in the EU is mandatory self-regulation for foundation models. These are the big players in AI, so to speak. Under this approach, developers must disclose how their models work. It’s about being transparent. However, some fear this could slow innovation, especially for smaller companies trying to keep up.

Europe is trying to find the happy medium between regulating artificial intelligence and allowing it to expand. It’s a balancing act. The EU wants to be at the forefront of artificial intelligence without tripping over its own rules. A difficult task, isn’t it?

Conclusion

To summarize, AI is developing rapidly, but with the benefits come many risks to society. As more industries and companies adopt AI-driven processes or build their own tailored AI software, it’s clear that we need AI regulations to eliminate bias, discrimination, and unlawful use of this technology.

The problem is that creating and adopting regulations raises many ethical issues of its own. Thus, it’s not an easy task. Yet, there are many AI regulation frameworks that aim to ensure the safe use of this technology. When harnessing the power of artificial intelligence, make sure you follow the principles and guidelines mentioned in this article. If you have any questions on how to create an ethical AI, contact our experts!